| IIT Kanpur | 2011-2016 |
| UMass Amherst | Summer 2015 |
| UIUC | 2016-2022 |
| CMU & Meta | 2022-present |
| Uber ATG | Summer 2017 |
| Allen Institute for AI | Summer 2018, 2020 |
| FAIR (collab w/ UT Austin) | Summer 2019, FA19, SP20 |
| Google DeepMind | Summer 2021, FA21 |

| Dec 2023 | Organizing the community-building workshop 'CV 20/20: A Retrospective Vision' at CVPR 2024. |
| Sep 2023 | Serving as Area Chair for CVPR 2024. |
| Jun 2023 | Organizing the 'Scholars & Big Models: How Can Academics Adapt?' workshop at CVPR 2023. |
| Jan 2023 | Serving as Area Chair for NeurIPS 2023. |
| Dec 2022 | Organizing the RoboAdapt Workshop at CoRL 2023. |
| Sep 2022 | Serving as Area Chair for CVPR 2023. |
| Mar 2022 | Started at Meta AI & CMU w/ Abhinav, Deepak, and Xinlei -- diving into embodied learning (from & for humans), representations, and robotics. |

Exploitation-Guided Exploration for Semantic Embodied Navigation

Justin Wasserman, Girish Chowdhary, Abhinav Gupta, Unnat Jain

ICRA 2024

Best Paper at NeurIPS 2023 Robot Learning Workshop

paper | project | code

Habitat 3.0: A Co-Habitat for Humans, Avatars and Robots

Xavi Puig*, Eric Undersander*, Andrew Szot*, Mikael Cote*, Ruslan Partsey*, Jimmy Yang*, Ruta Desai*, Alexander Clegg*, Michal Hlavac, Tiffany Min, Theo Gervet, Vladimír Vondruš, Vincent-Pierre Berges, John Turner, Oleksandr Maksymets, Zsolt Kira, Mrinal Kalakrishnan, Jitendra Malik, Devendra Chaplot, Unnat Jain, Dhruv Batra, Akshara Rai**, Roozbeh Mottaghi**

ICLR 2024

paper | project | code

An Unbiased Look at Datasets for Visuo-Motor Pre-Training

Sudeep Dasari, Mohan Kumar Srirama, Unnat Jain*, Abhinav Gupta*

CoRL 2023

project | pdf

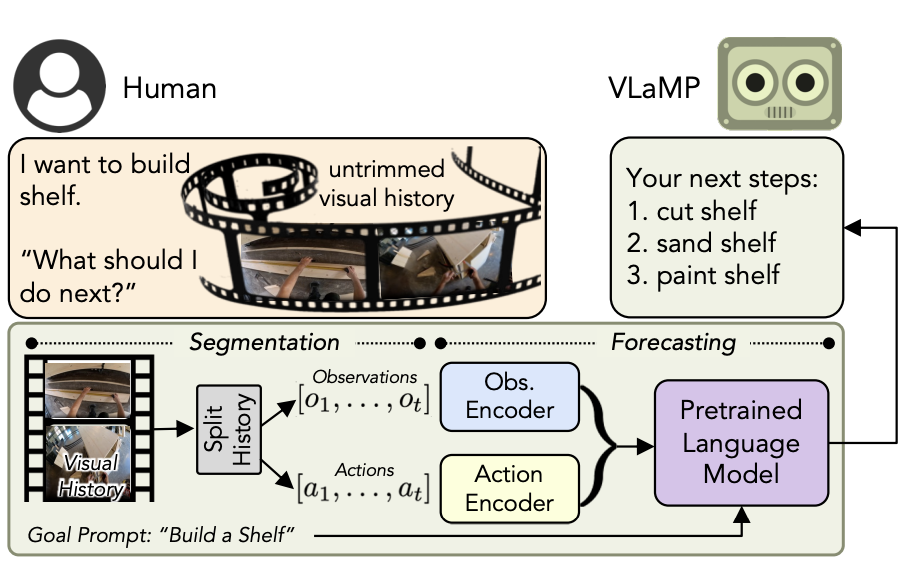

Pretrained Language Models as Visual Planners for Human Assistance

Dhruvesh Patel, Hamid Eghbalzadeh, Nitin Kamra, Michael Louis Iuzzolino, Unnat Jain*, Ruta Desai*

ICCV 2023

paper | code

Adaptive Coordination in Social Embodied Rearrangement

Andrew Szot, Unnat Jain, Dhruv Batra, Zsolt Kira, Ruta Desai, Akshara Rai

ICML 2023

paper | code

Affordances from Human Videos as a Versatile Representation for Robotics

Shikhar Bahl*, Russell Mendonca*, Lili Chen, Unnat Jain, Deepak Pathak

CVPR 2023

paper | project

MOPA: Modular Object Navigation with PointGoal Agents

Sonia Raychaudhuri, Tommaso Campari, Unnat Jain, Manolis Savva, Angel X. Chang

WACV 2024

paper | project | code

Last-Mile Embodied Visual Navigation

Justin Wasserman*, Karmesh Yadav, Girish Chowdhary, Abhinav Gupta, Unnat Jain*

CoRL 2022

paper | project | code

Retrospectives on the Embodied AI Workshop

Matt Deitke, Dhruv Batra, Yonatan Bisk, ... Unnat Jain ... Luca Weihs, Jiajun Wu

arXiv 2022

paper

Learning State-Aware Visual Representations from Audible Interactions

Himangi Mittal, Pedro Morgado, Unnat Jain, Abhinav Gupta

NeurIPS 2022

paper | code

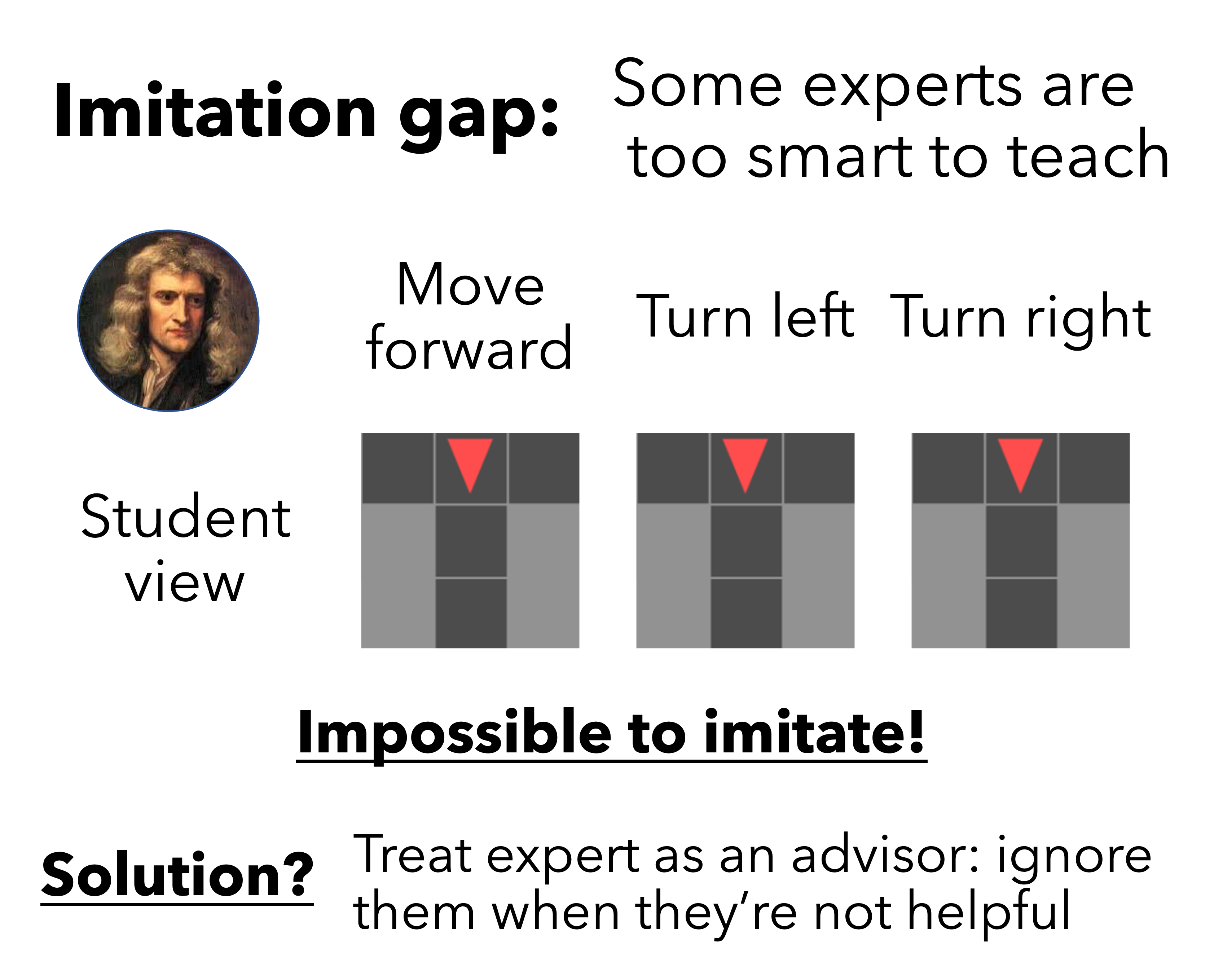

Bridging the Imitation Gap by Adaptive Insubordination

Luca Weihs*, Unnat Jain*, Iou-Jen Liu, Jordi Salvador, Svetlana Lazebnik, Aniruddha Kembhavi, Alexander Schwing

NeurIPS 2021

paper | project | code

Language-Aligned Waypoint (LAW) Supervision for Vision-and-Language Navigation in Continuous Environments

Sonia Raychaudhuri, Saim Wani, Shivansh Patel, Unnat Jain, Angel X. Chang

EMNLP 2021 (short)

paper | project | code

GridToPix: Training Embodied Agents with Minimal Supervision

Unnat Jain, Iou-Jen Liu, Svetlana Lazebnik, Aniruddha Kembhavi, Luca Weihs*, Alexander Schwing*

ICCV 2021

paper | project

Interpretation of Emergent Communication in Heterogeneous Collaborative Embodied Agents

Shivansh Patel*, Saim Wani*, Unnat Jain*, Alexander Schwing, Svetlana Lazebnik, Manolis Savva, Angel X. Chang

ICCV 2021

paper | project | code

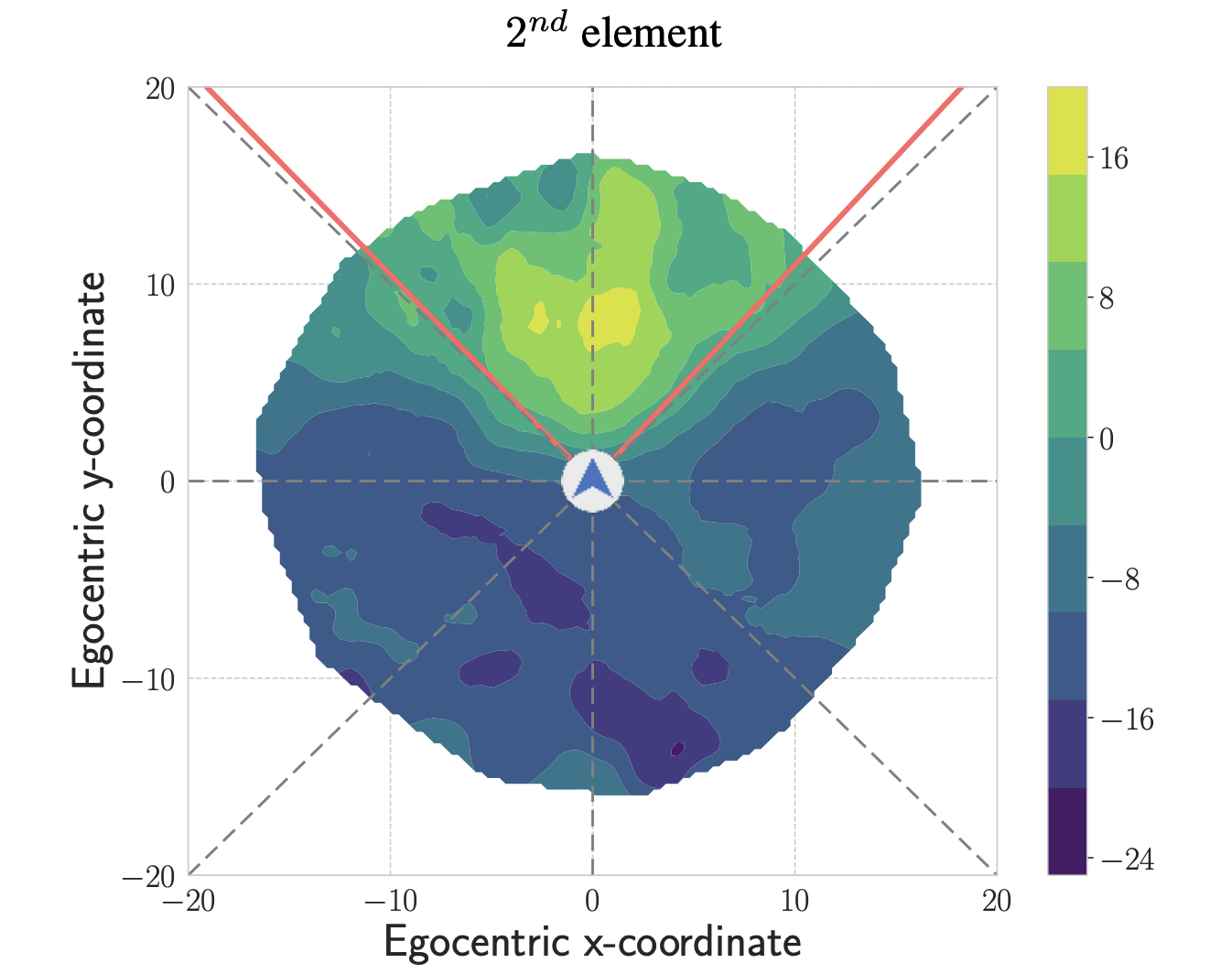

Cooperative Exploration for Multi-Agent Deep Reinforcement Learning

Iou-Jen Liu, Unnat Jain, Raymond Yeh, Alexander Schwing

ICML 2021 (long oral)

paper | project | code

MultiON: Benchmarking Semantic Map Memory using Multi-Object Navigation

Saim Wani*, Shivansh Patel*, Unnat Jain*, Angel X. Chang, Manolis Savva

NeurIPS 2020

paper | project | code | challenge

AllenAct: A Framework for Embodied AI Research

Luca Weihs*, Jordi Salvador*, Klemen Kotar*, Unnat Jain, Kuo-Hao Zeng, Roozbeh Mottaghi, Aniruddha Kembhavi

arXiv 2020

paper | project | code

Media: VentureBeat, Hackster, AIM

A Cordial Sync: Going Beyond Marginal Policies For Multi-Agent Embodied Tasks

Unnat Jain*, Luca Weihs*, Eric Kolve, Ali Farhadi, Svetlana Lazebnik, Aniruddha Kembhavi, Alexander Schwing

ECCV 2020 (spotlight)

paper | project | code

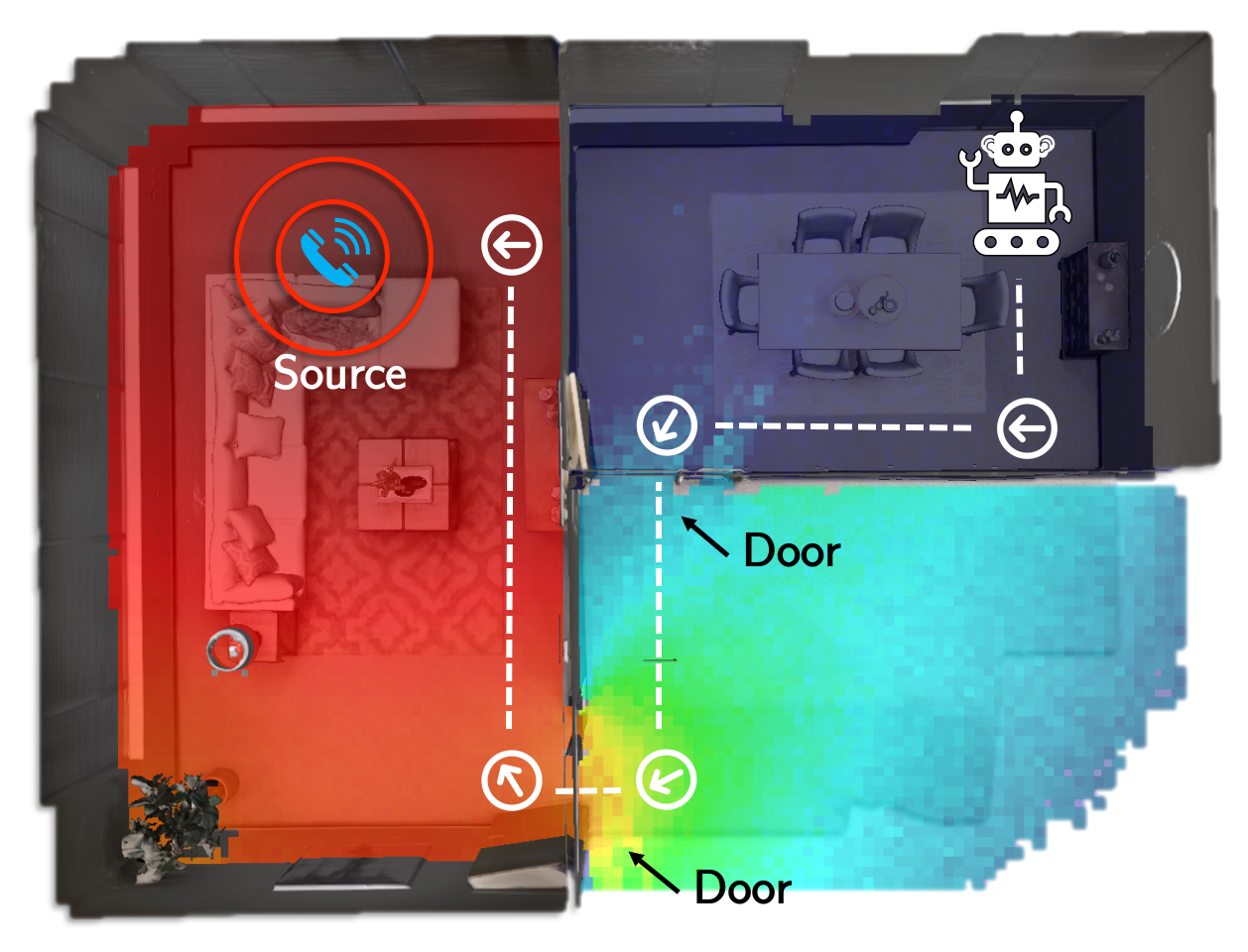

SoundSpaces: Audio-Visual Navigation in 3D Environments

Changan Chen*, Unnat Jain*, Carl Schissler, Sebastia Vicenc Amengual Gari, Ziad Al-Halah, Vamsi Krishna Ithapu, Philip Robinson, Kristen Grauman

ECCV 2020 (spotlight)

paper | project | code | challenge

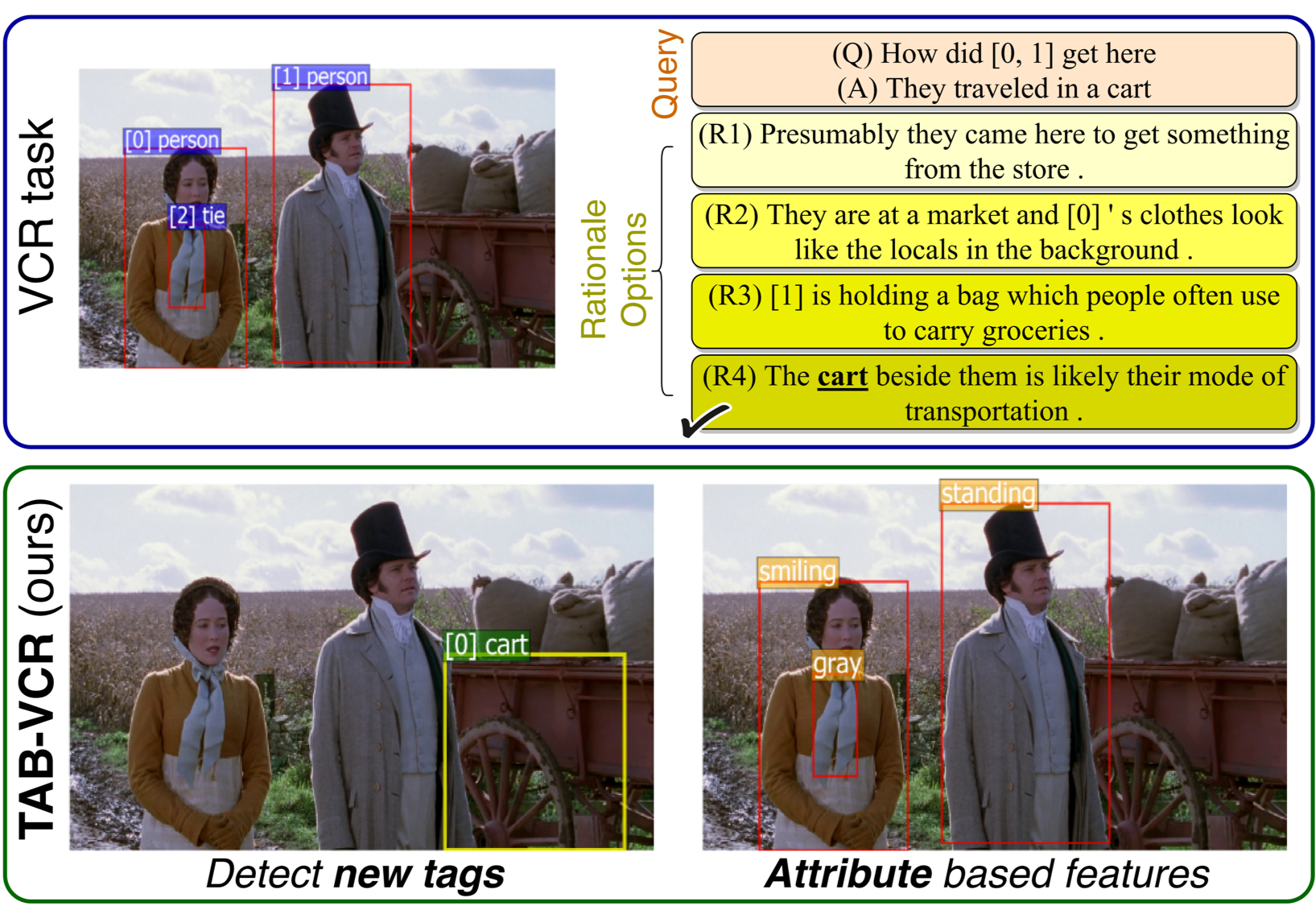

TAB-VCR: Tags and Attributes based VCR Baselines

Jingxiang Lin, Unnat Jain, Alexander Schwing

NeurIPS 2019

paper | project | code

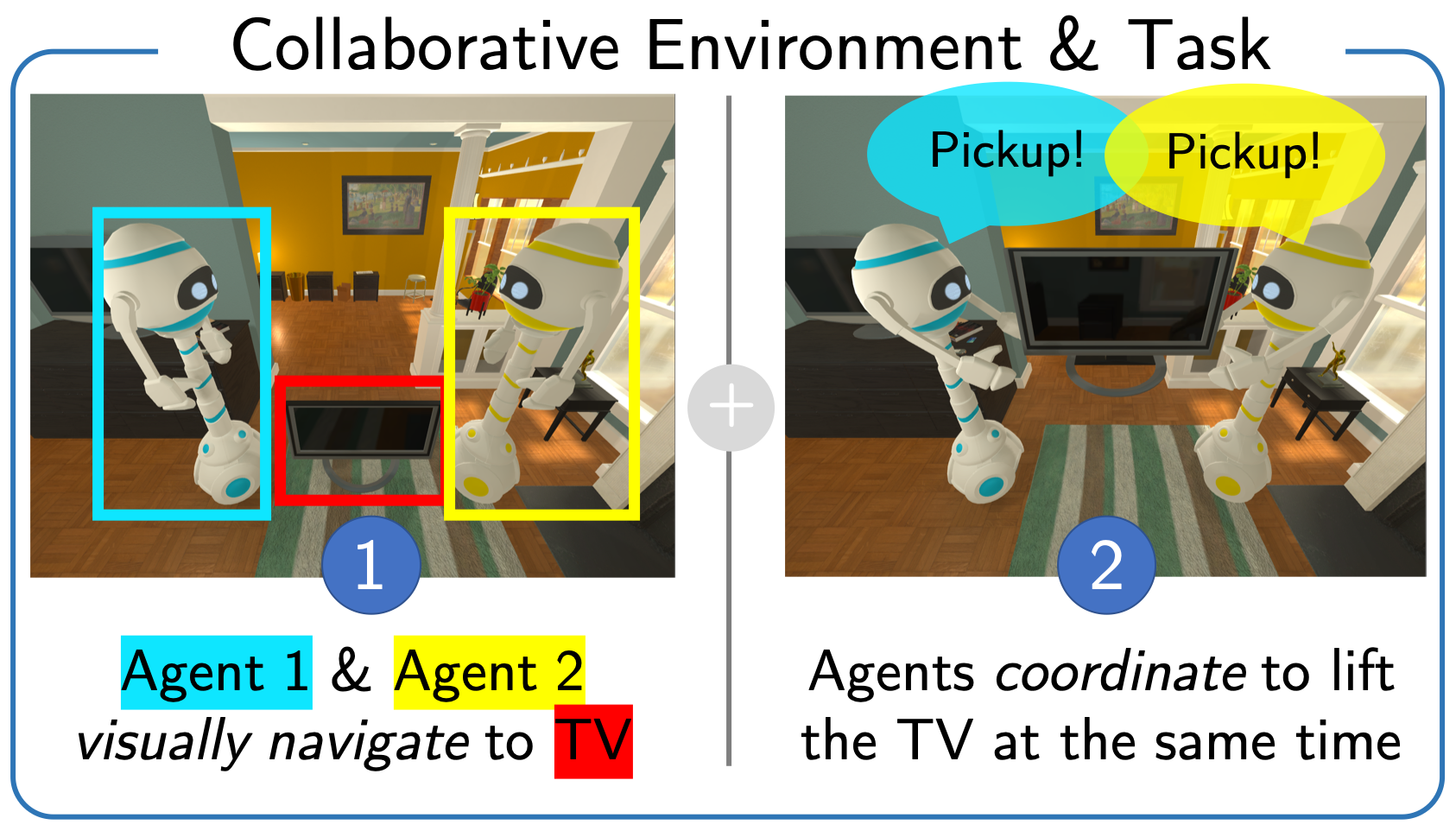

Two Body Problem: Collaborative Visual Task Completion

Unnat Jain*, Luca Weihs*, Eric Kolve, Mohammad Rastegari, Svetlana Lazebnik, Ali Farhadi, Alexander Schwing, Aniruddha Kembhavi

CVPR 2019 (oral)

paper | project | code

Talk @ Amazon: video, ppt, pdf

Talk @ CVPR'19: video, ppt, pdf, poster

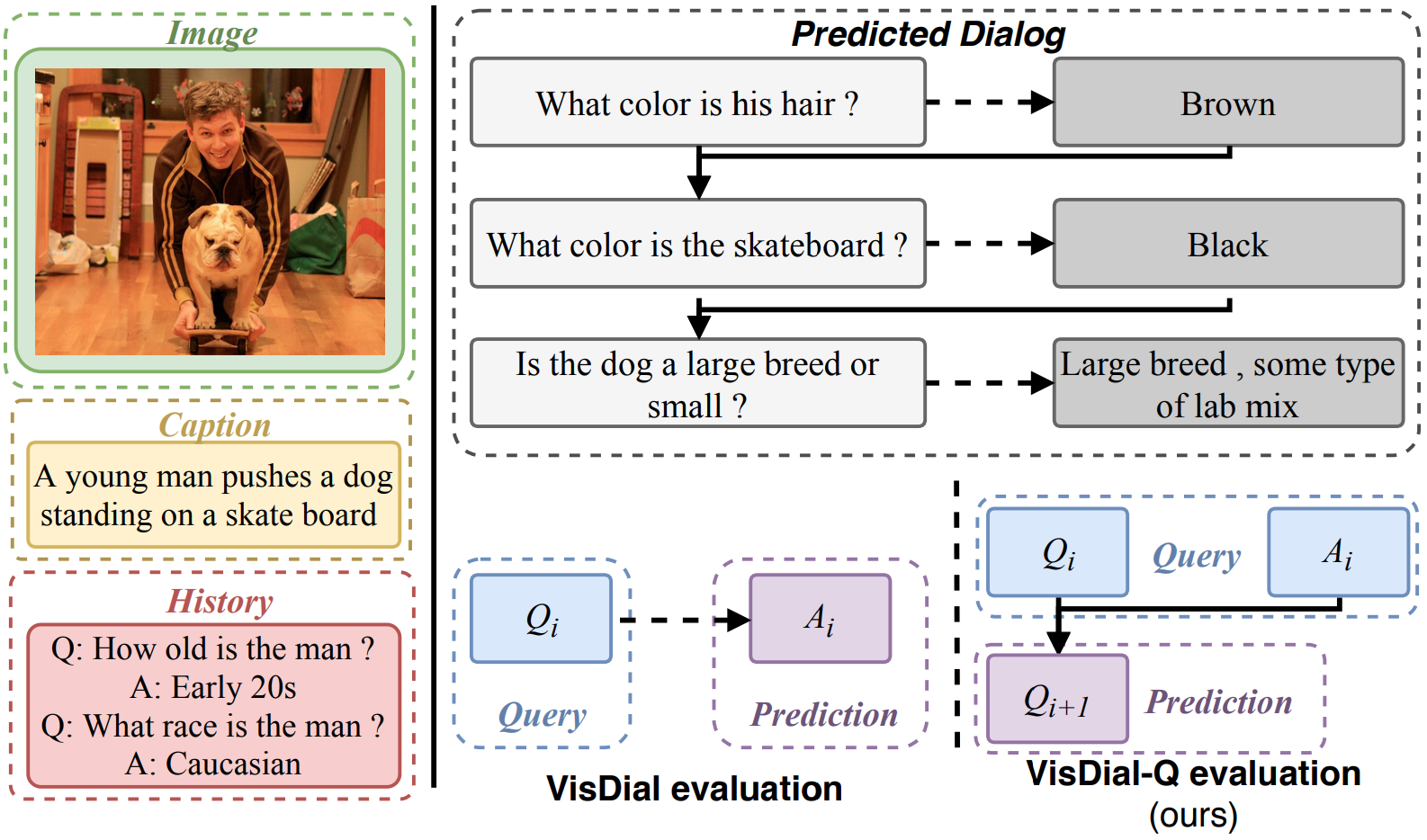

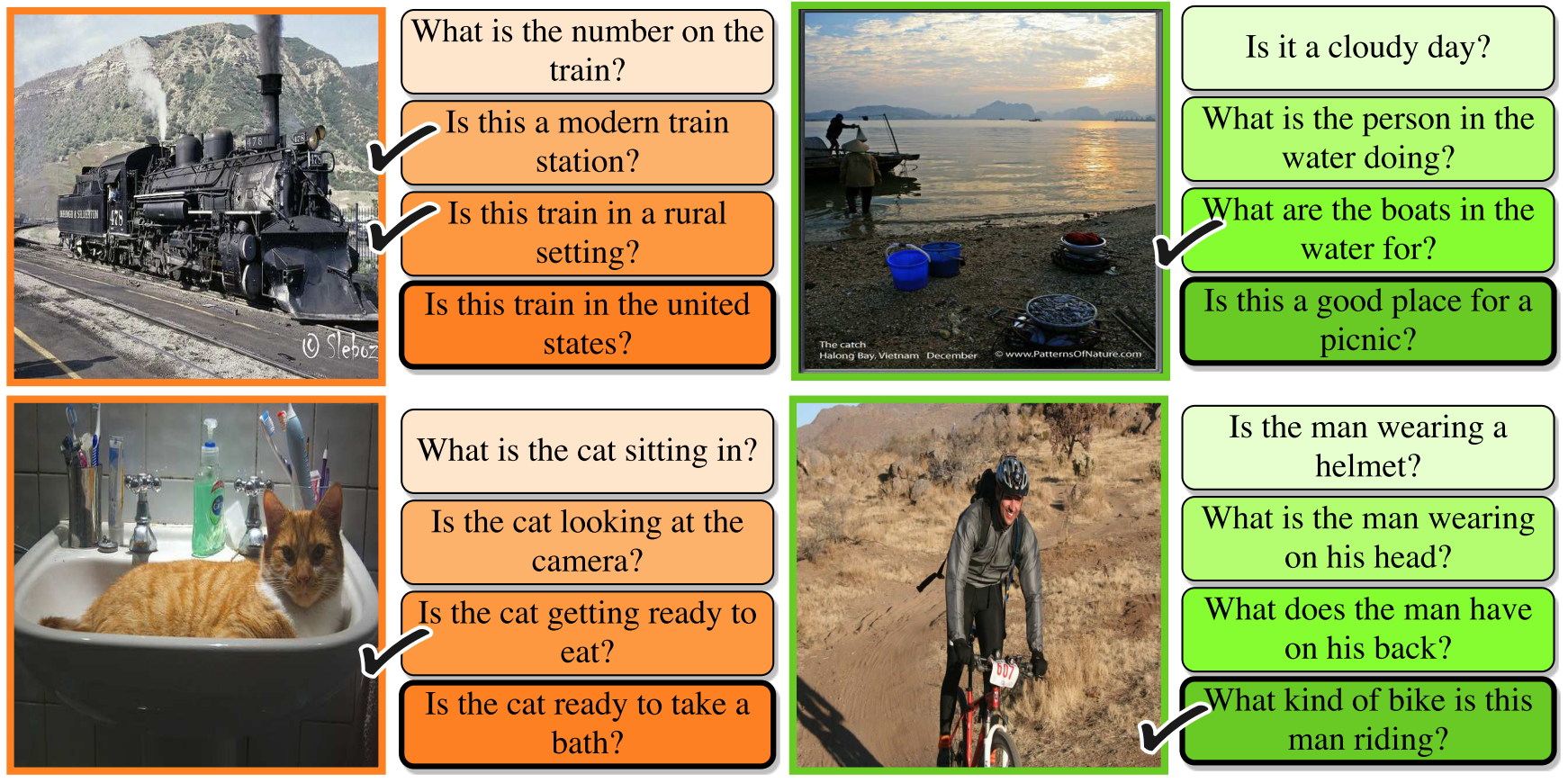

Two can play this Game: Visual Dialog with Discriminative Question Generation and Answering

Unnat Jain, Svetlana Lazebnik, Alexander Schwing

CVPR 2018

Creativity: Generating Diverse Questions using Variational Autoencoders

Unnat Jain*, Ziyu Zhang*, Alexander Schwing

CVPR 2017 (spotlight)

video | paper

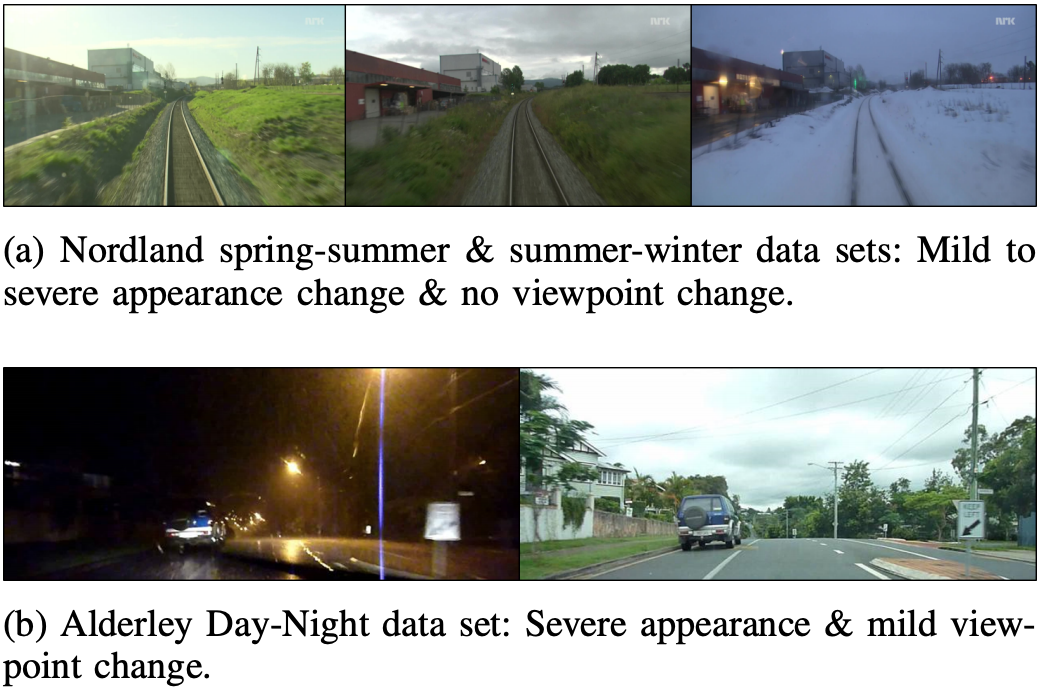

Compact Environment-Invariant Codes for Robust Visual Place Recognition

Unnat Jain, Vinay Namboodiri, Gaurav Pandey

Conference on Computer and Robot Vision (CRV) 2017
