Unnat Jain


I am an Assistant Professor of Computer Science at the University of California, Irvine.

Toward building general-purpose embodied intelligence, my research focuses on the intersection of computer vision (perception) and robot learning (action). I have worked across industry, academia, and startups at Meta's Fundamental AI Research (FAIR) Labs, Carnegie Mellon University, and Skild AI, collaborating with Abhinav Gupta, Deepak Pathak, and Xinlei Chen. I received my PhD from UIUC, advised by Alex Schwing and Svetlana Lazebnik, and previously graduated from IIT Kanpur.

For applicants: UC Irvine offers what I believe are important conditions for focused research: an active AI/ML intellectual community, research infrastructure to support ambitious projects, guaranteed affordable housing for all years (a rarity in California), and a safe environment with year-round access to nature. The campus has established pathways to real-world impact, and proximity to Southern California's AI and robotics industry creates opportunities for meaningful collaborations and diverse career paths.

The deadline for PhD applications is December 15, 2025. For opportunities to work with me, see my UCI profile.

CV | E-Mail | Google Scholar | GitHub | UCI Profile | Twitter (@unnatjain2010)

People

Our research spans vision-language-action models, human-to-robot learning, sim-to-real transfer, pre-training strategies for embodied agents, and multi-agent collaboration. We're building systems that enable robots to learn from diverse data sources and work together on complex real-world tasks.

I am extremely fortunate to work with an amazing set of students and collaborators, and I'm deeply grateful for their support. We are continuing to grow our group.

Dwip Dalal, Daniel Feng, Aditya Mittal, Sagar Patil, Shivansh Patel, Yuchen Song

Affiliations

IIT Kanpur 2011-2016

UIUC 2016-2022

CMU & Meta 2022-2024

Skild AI 2024-2025

UC Irvine 2025-

Internships

UMass Amherst Summer 2015

Uber ATG Summer 2017

Allen Institute for AI Summer 2018, 2020

FAIR (collab w/ UT Austin) Summer 2019, Fall 2019, Spring 2020

Google DeepMind Summer 2021, Fall 2021

Updates

| Area Chairing | Currently: CVPR 2026, ICLR 2026, NeurIPS 2025; CoRL 2025; CVPR 2023, 2024, 2025; ICCV 2025; ICLR 2025; NeurIPS 2023, 2024 |

| Jan 2026 | Invited talk at UCSD, on Controlling VLMs for Robot Control. |

| Dec 2025 | ViPRA won the Best Paper Award at the NeurIPS 2025 Embodied World Models Workshop. Congrats, Sandeep & team. |

| Oct 2025 | First quarter at UC Irvine as an Assistant Professor in Computer Science. |

| Nov 2024 | For those on the faculty job search, here is a note I wrote: Hidden Curriculum of Faculty Job Search |

| Jun 2024 | Organizing the community-building workshop 'CV 20/20: A Retrospective Vision' at CVPR 2024. |

| Jun 2024 | Accepted a faculty position at UC Irvine. I will spend the next year at Skild AI and start at UC Irvine in 2025. |

| Jun 2023 | Organizing the 'Scholars & Big Models: How Can Academics Adapt?' workshop at CVPR 2023. |

| Dec 2022 | Organizing the RoboAdapt Workshop at CoRL 2023. |

Publications

Sandeep Routray, Hengkai Pan, Unnat Jain, Shikhar Bahl, Deepak Pathak ICLR 2026 Best Paper Award at NeurIPS 2025 Embodied World Models Workshop project | paper | code |


Dwip Dalal, Gautam Vashishtha, Utkarsh Mishra, Jeonghwan Kim, Madhav Kanda, Hyeonjeong Ha, Svetlana Lazebnik, Heng Ji, Unnat Jain ICLR 2026 paper | project | code |


Shivansh Patel, Shraddhaa Mohan, Hanlin Mai, Unnat Jain*, Svetlana Lazebnik*, Yunzhu Li* ICLR 2026 paper | project | code |


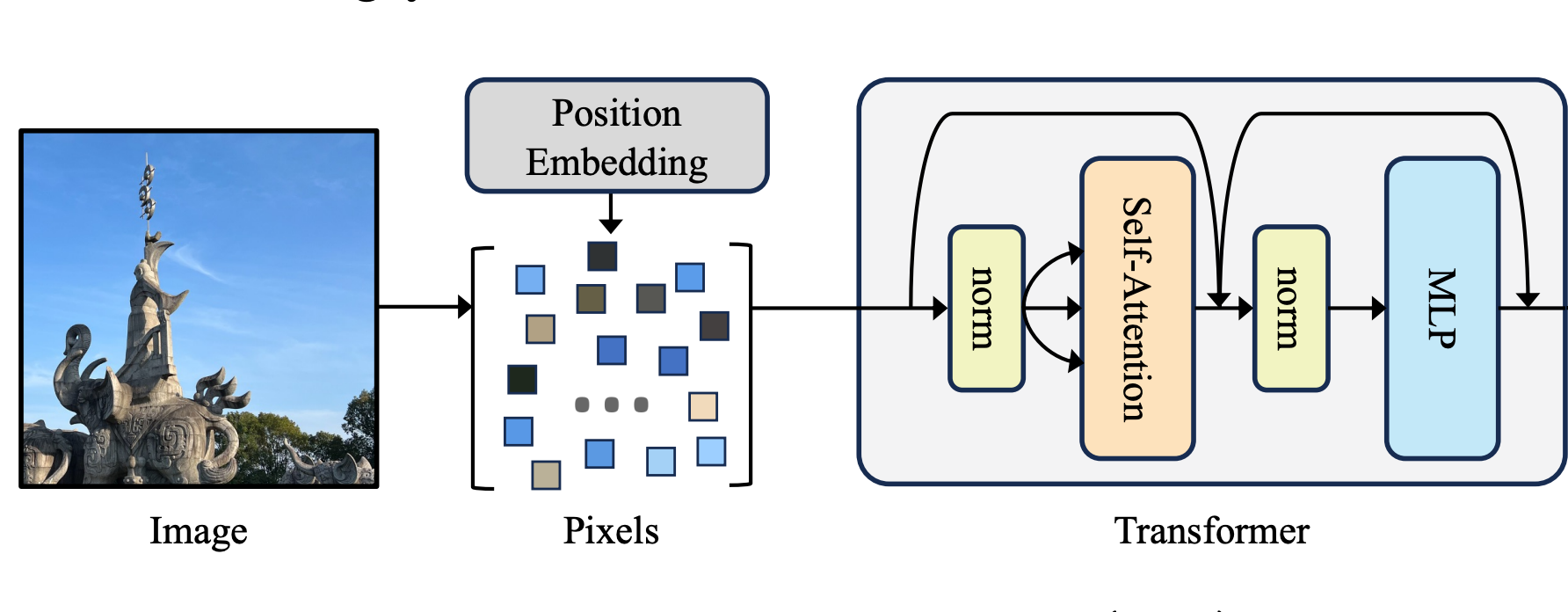

Duy-Kien Nguyen, Mahmoud Assran, Unnat Jain, Martin R Oswald, Cees GM Snoek, Xinlei Chen ICLR 2025 paper |


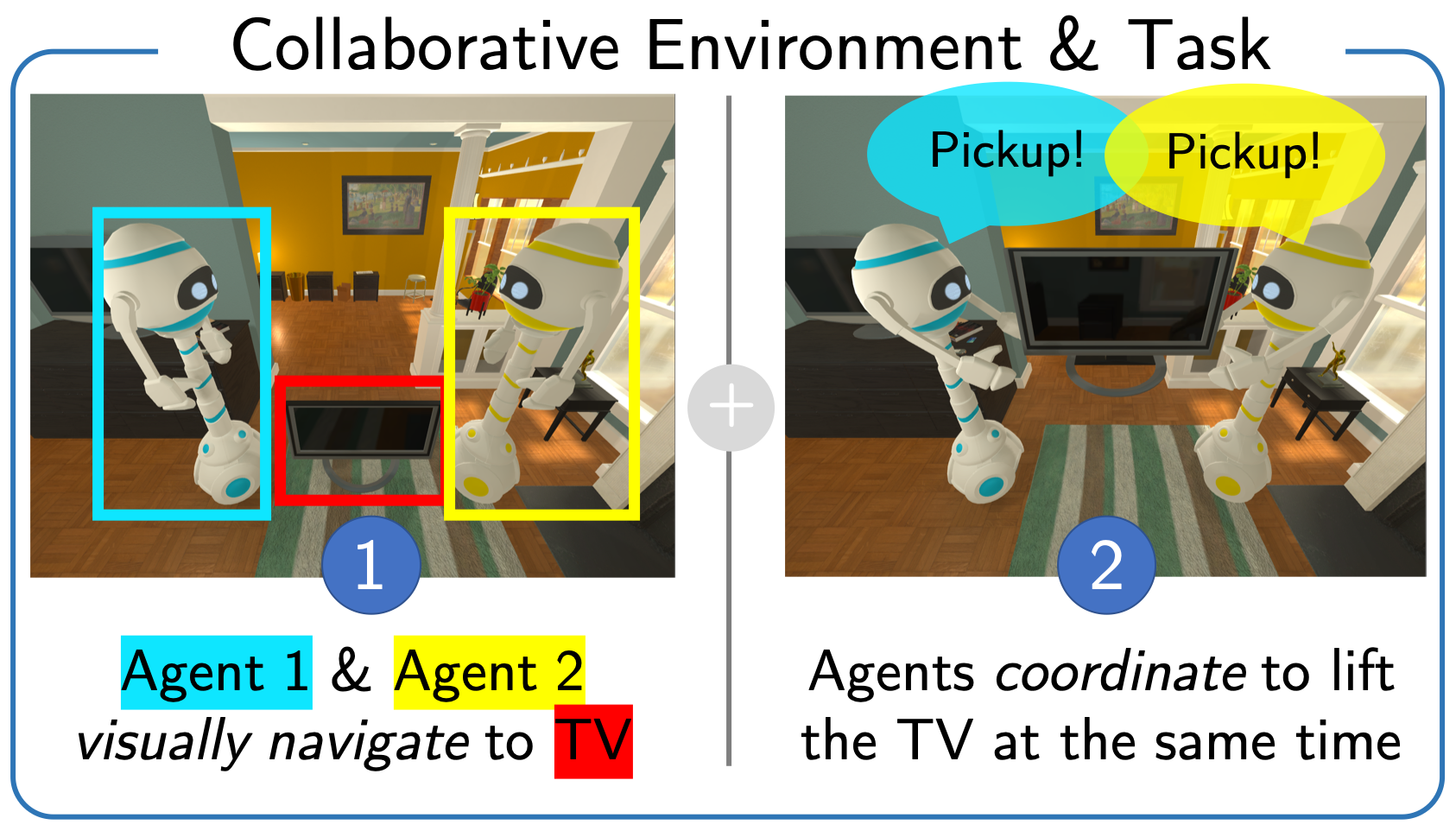

Justin Wasserman, Girish Chowdhary, Abhinav Gupta, Unnat Jain ICRA 2024 Best Paper at NeurIPS 2023 Robot Learning Workshop paper | project | code |

Xavi Puig*, Eric Undersander*, Andrew Szot*, Mikael Cote*, Ruslan Partsey*, Jimmy Yang*, Ruta Desai*, Alexander Clegg*, Michal Hlavac, Tiffany Min, Theo Gervet, Vladimír Vondruš, Vincent-Pierre Berges, John Turner, Oleksandr Maksymets, Zsolt Kira, Mrinal Kalakrishnan, Jitendra Malik, Devendra Chaplot, Unnat Jain, Dhruv Batra, Akshara Rai**, Roozbeh Mottaghi** ICLR 2024 paper | project | code |

Sudeep Dasari, Mohan Kumar Srirama, Unnat Jain*, Abhinav Gupta* CoRL 2023 project | pdf |


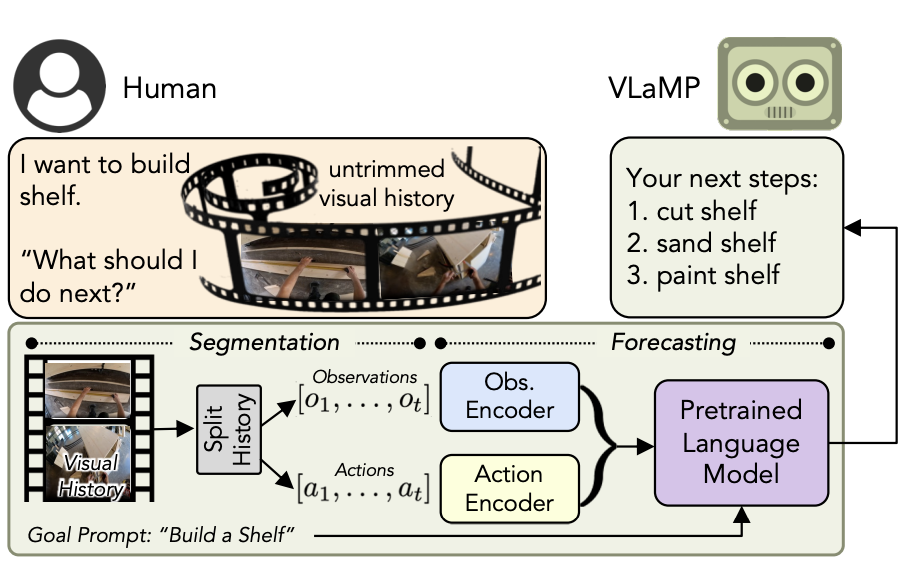

Dhruvesh Patel, Hamid Eghbalzadeh, Nitin Kamra, Michael Louis Iuzzolino, Unnat Jain*, Ruta Desai* ICCV 2023 paper | code |


Andrew Szot, Unnat Jain, Dhruv Batra, Zsolt Kira, Ruta Desai, Akshara Rai ICML 2023 paper | code |

Shikhar Bahl*, Russell Mendonca*, Lili Chen, Unnat Jain, Deepak Pathak CVPR 2023 paper | project |

Sonia Raychaudhuri, Tommaso Campari, Unnat Jain, Manolis Savva, Angel X. Chang WACV 2024 paper | project | code |


Justin Wasserman*, Karmesh Yadav, Girish Chowdhary, Abhinav Gupta, Unnat Jain* CoRL 2022 paper | project | code |


Matt Deitke, Dhruv Batra, Yonatan Bisk, ... Unnat Jain ... Luca Weihs, Jiajun Wu arXiv 2022 paper |


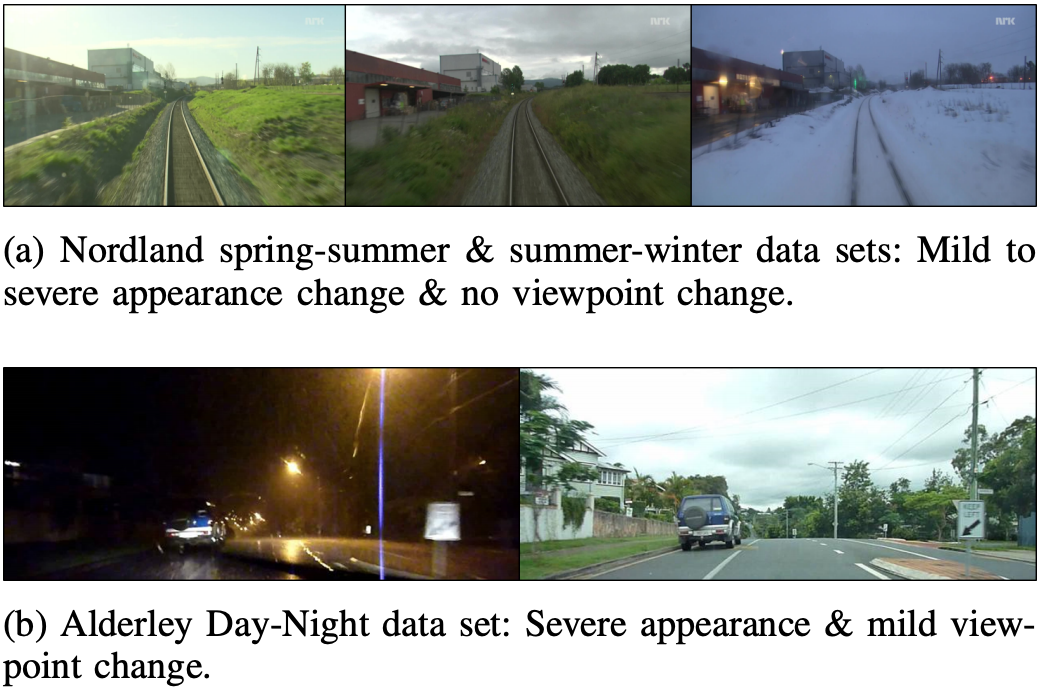

Himangi Mittal, Pedro Morgado, Unnat Jain, Abhinav Gupta NeurIPS 2022 paper | code |


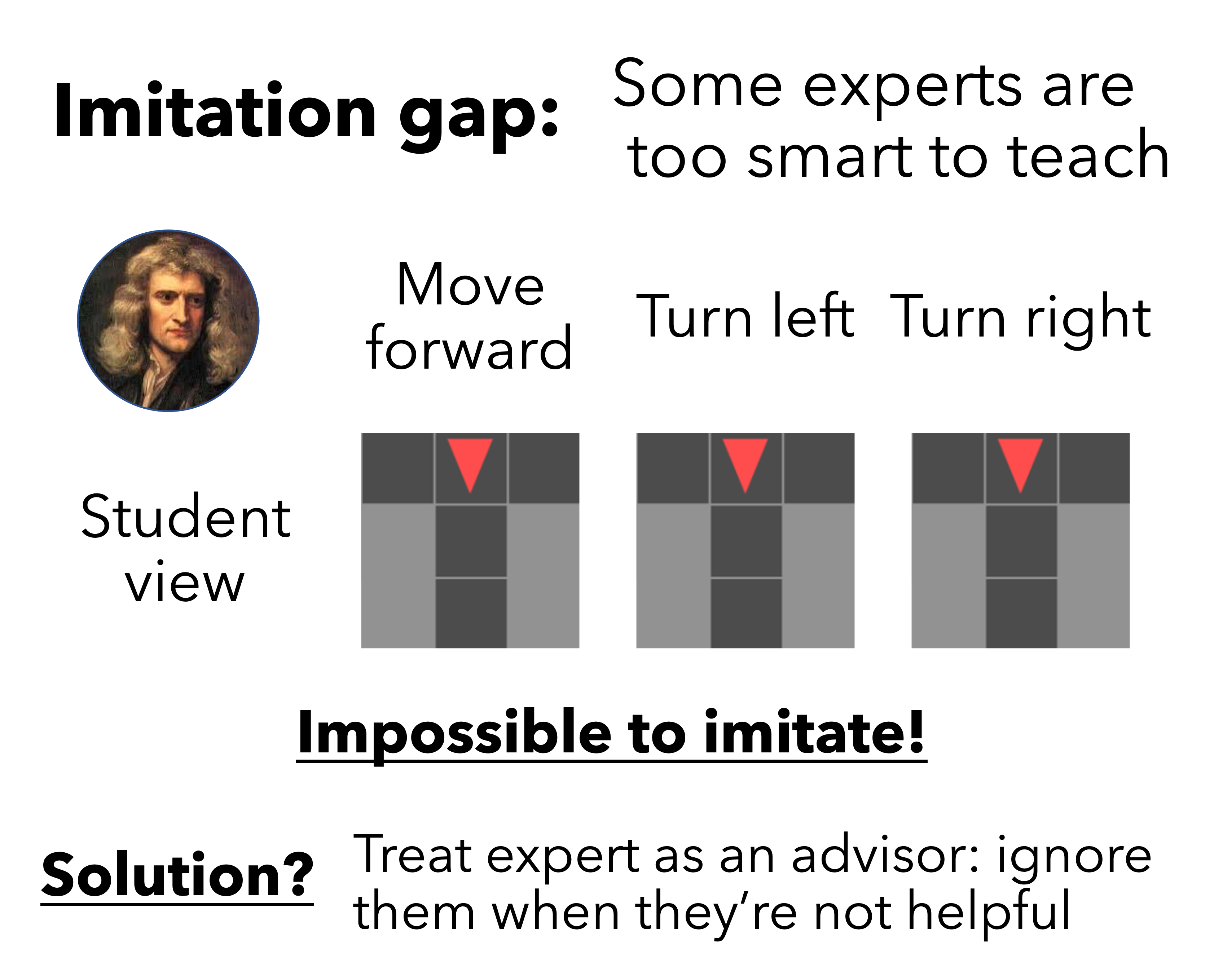

Luca Weihs*, Unnat Jain*, Iou-Jen Liu, Jordi Salvador, Svetlana Lazebnik, Aniruddha Kembhavi, Alexander Schwing NeurIPS 2021 paper | project | code |


Sonia Raychaudhuri, Saim Wani, Shivansh Patel, Unnat Jain, Angel X. Chang EMNLP 2021 (short) paper | project | code |


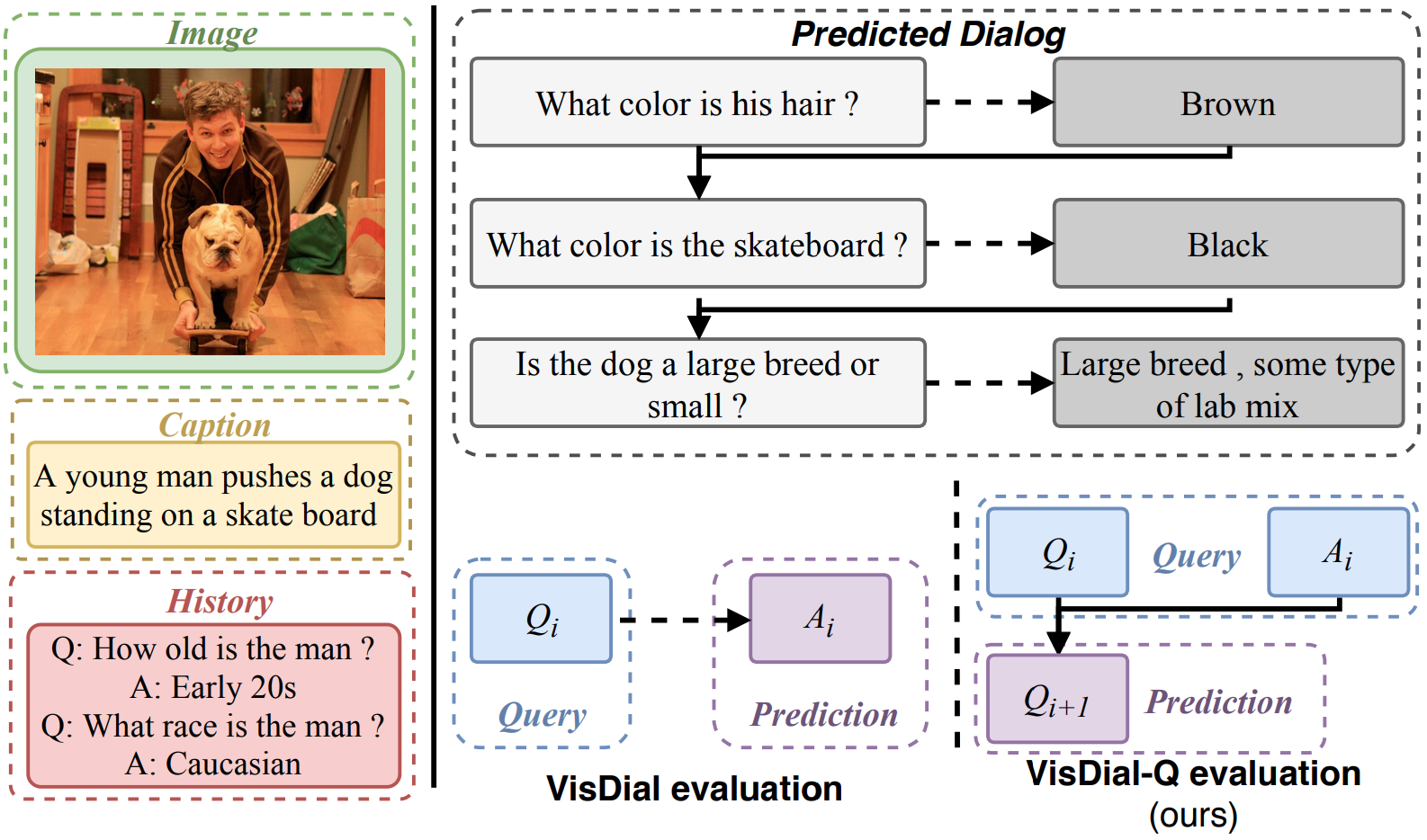

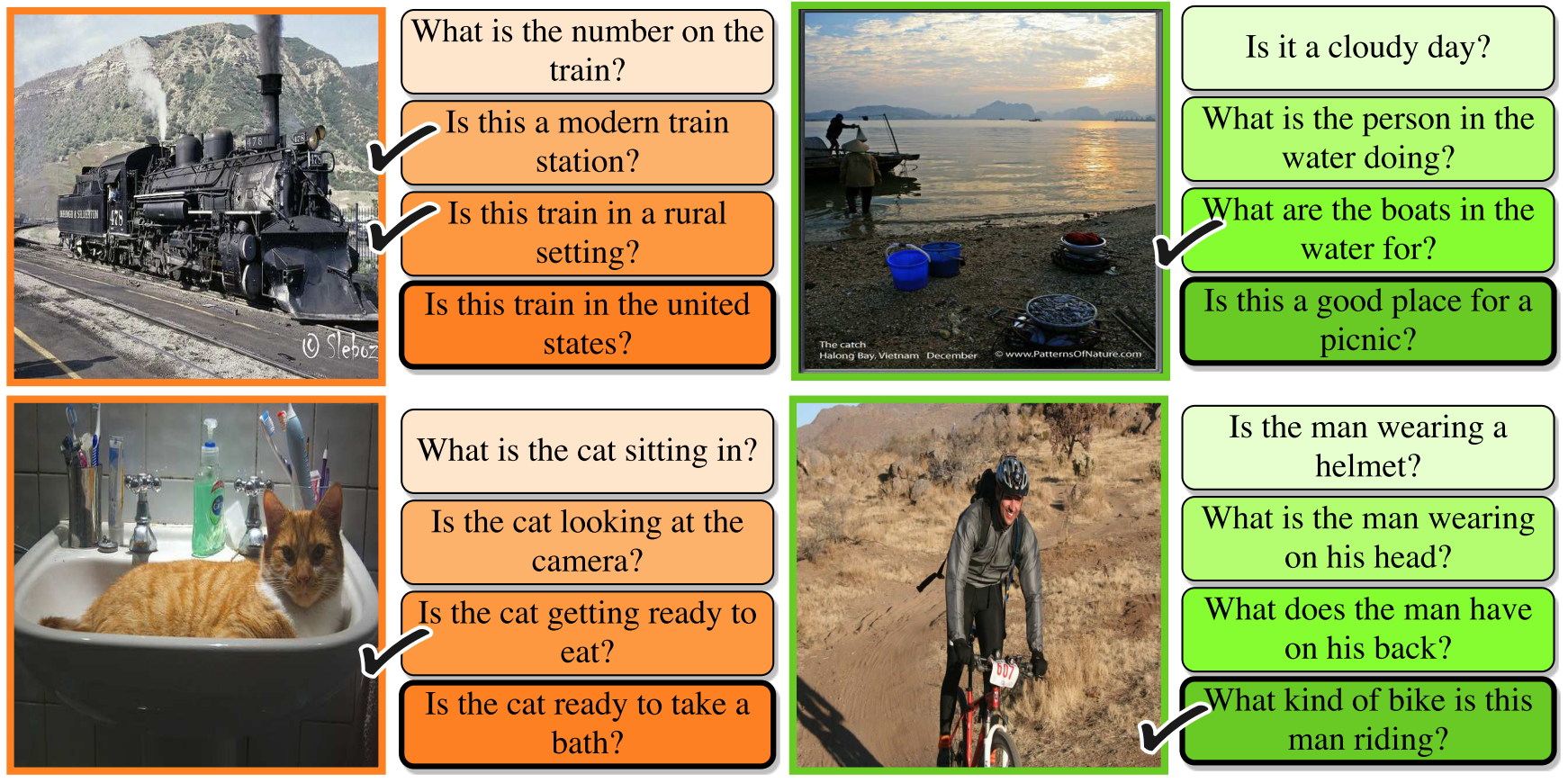

Unnat Jain, Iou-Jen Liu, Svetlana Lazebnik, Aniruddha Kembhavi, Luca Weihs*, Alexander Schwing* ICCV 2021 paper | project |


Shivansh Patel*, Saim Wani*, Unnat Jain*, Alexander Schwing, Svetlana Lazebnik, Manolis Savva, Angel X. Chang ICCV 2021 paper | project | code |


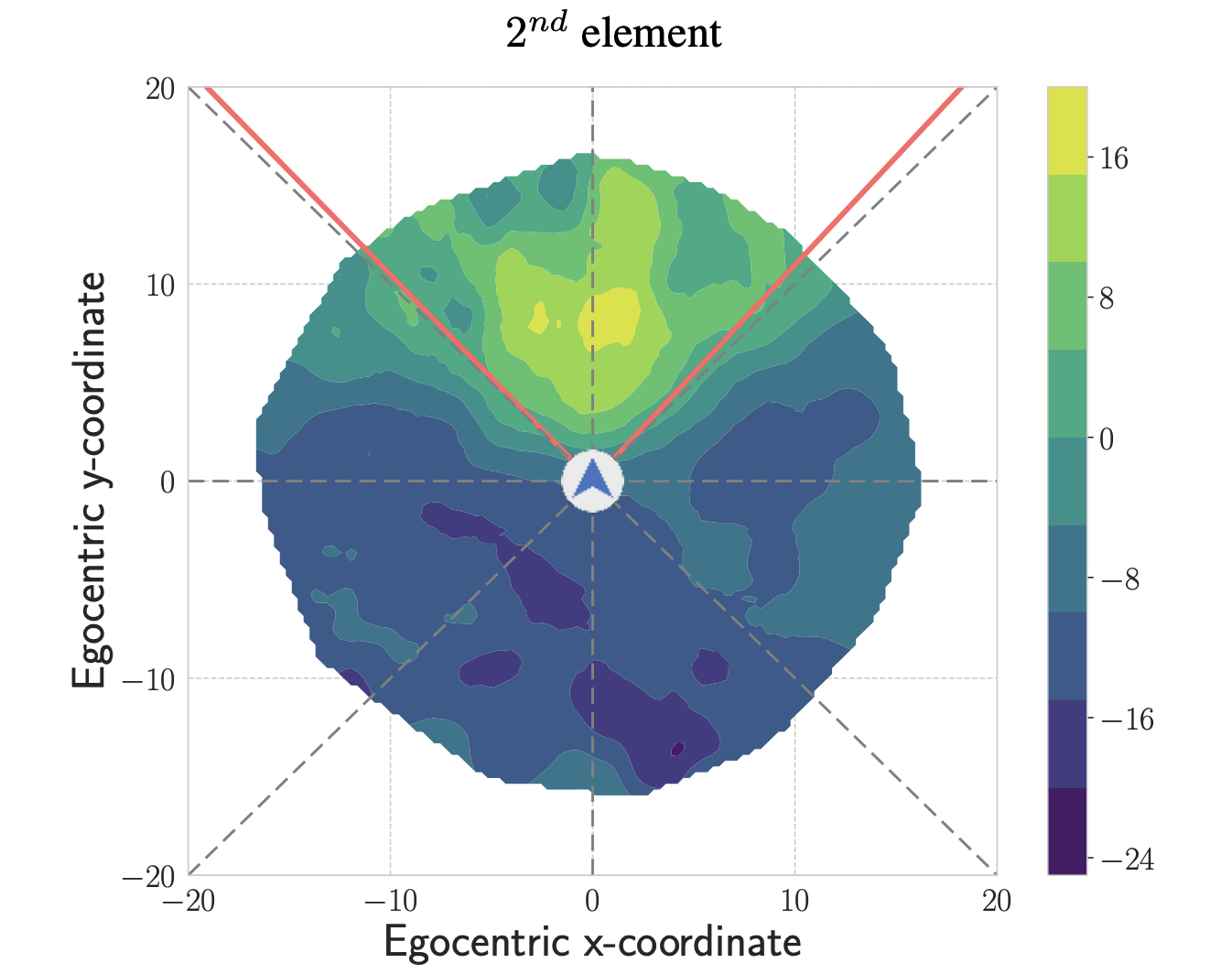

Iou-Jen Liu, Unnat Jain, Raymond Yeh, Alexander Schwing ICML 2021 (long oral) paper | project | code |


Saim Wani*, Shivansh Patel*, Unnat Jain*, Angel X. Chang, Manolis Savva NeurIPS 2020 paper | project | code | challenge |

Luca Weihs*, Jordi Salvador*, Klemen Kotar*, Unnat Jain, Kuo-Hao Zeng, Roozbeh Mottaghi, Aniruddha Kembhavi arXiv 2020 paper | project | code |

Unnat Jain*, Luca Weihs*, Eric Kolve, Ali Farhadi, Svetlana Lazebnik, Aniruddha Kembhavi, Alexander Schwing ECCV 2020 (spotlight) paper | project | code |

|

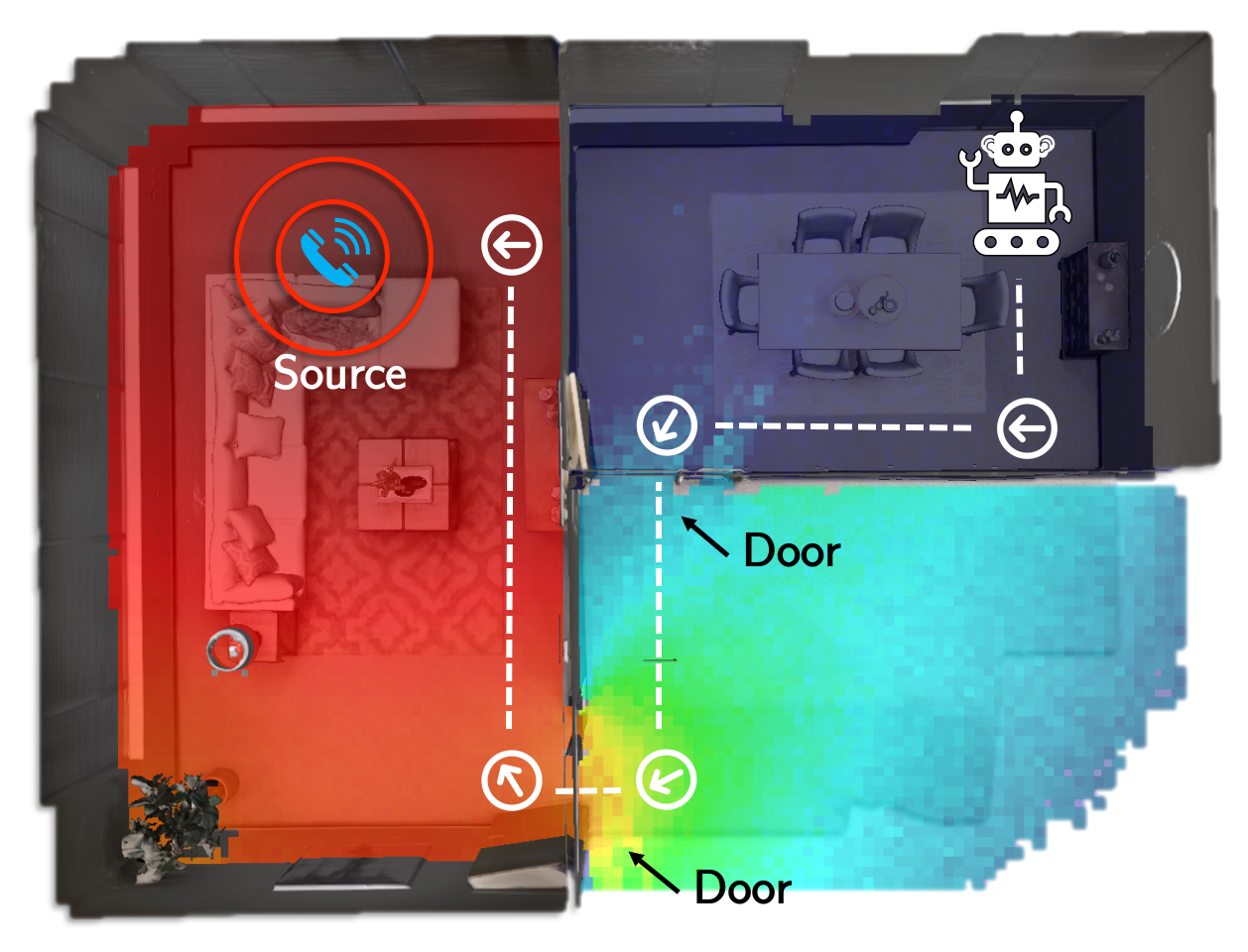

Changan Chen*, Unnat Jain*, Carl Schissler, Sebastia Vicenc Amengual Gari, Ziad Al-Halah, Vamsi Krishna Ithapu, Philip Robinson, Kristen Grauman ECCV 2020 (spotlight) paper | project | code | challenge Media: |

|

Jingxiang Lin, Unnat Jain, Alexander Schwing NeurIPS 2019 paper | project | code |


Unnat Jain*, Luca Weihs*, Eric Kolve, Mohammad Rastegari, Svetlana Lazebnik, Ali Farhadi, Alexander Schwing, Aniruddha Kembhavi CVPR 2019 (oral) paper | project | code Talk @ Amazon: video, ppt, pdf Talk @ CVPR'19: video, ppt, pdf, poster |


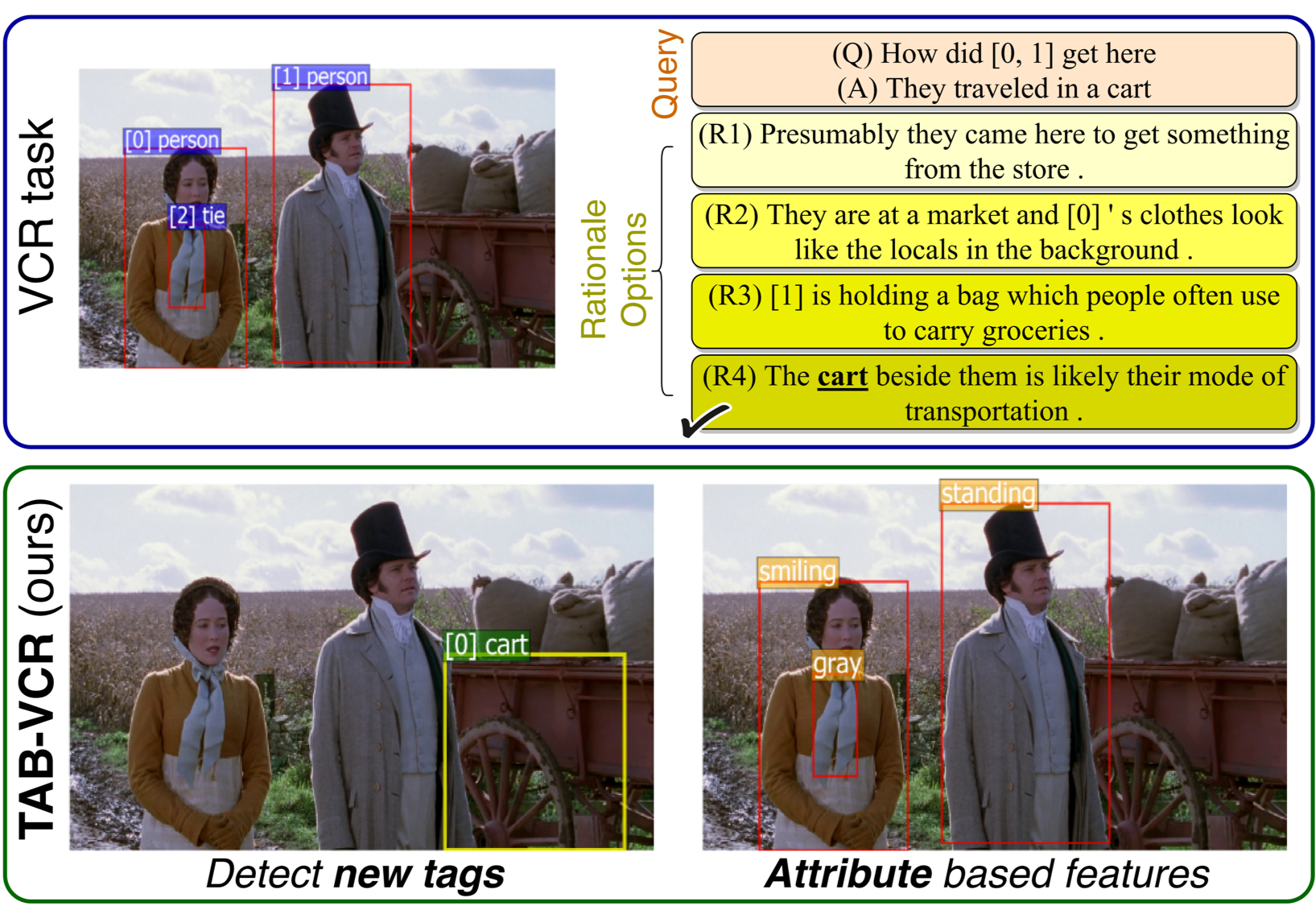

Unnat Jain, Svetlana Lazebnik, Alexander Schwing CVPR 2018 |


Unnat Jain*, Ziyu Zhang*, Alexander Schwing CVPR 2017 (spotlight) video | paper |


Unnat Jain, Vinay Namboodiri, Gaurav Pandey Conference on Computer and Robot Vision (CRV) 2017 |
